– By Vaibhav Agrawal

The theory of artificial intelligence has gone through half a dozen cycles of boom and bust: periods when it was said confidently that computers would soon attain human-level intelligence and periods of disillusionment when this seemed nearly impossible. Today, we are in the latest boom period and some visionary computer scientists are going even further, asking when “AI”s (using the abbreviation AI to make the machine sound like a new life-form) will attain not merely human intelligence but our consciousness as well. Other futurists hope for a still wilder, life-altering boon: can I live forever by having my brain and my consciousness downloaded into silicon, essentially metamorphosing from a person into an AI? In the boom of a previous cycle, the wild prediction was that we were headed for “the singularity,” a point in time when super-AIs would create a wholly new world leading to the extinction of the now superseded human race (predicted by some to happen around 2050). I plead guilty to personally hoping that I would witness the first computer attaining consciousness. But now I am quite a bit more skeptical. Perhaps this is the negativity of old age, but perhaps too it is because I see this question as entraining issues not only from computer science but also from biology, physics, philosophy and, yes, from religion too. Who has the expertise to work out how all this bears on our understanding of consciousness? And even talking about the relevance of religion to any scientific advance is anathema to today’s intelligentsia. But just consider this: is there a belief system in which the Silicon Valley dream that humans will soon be able to live forever and the Christian credo of “the immortality of the soul” are both true? For me, these two beliefs seem to live in separate universes.

What’s missing in state-of-the-art AI?

Let me start by making some comments on the present AI boom and why it may lead to a bust in spite of its successes. The central player in the code that supports the new AI is an algorithm called a neural net. Every net uses zillions of parameters, called its weights, that must be set before it can do anything. To set them, the net is “trained” on real-world datasets by a second algorithm called back propagation. The resulting neural net then takes a set of numbers representing something observed as its input and outputs a label for this data. For example, it might take as input an image of someone’s face, represented by its pixel values, and output its guess as to whether the face is male or female. Training such a net requires feeding it a very large number of male and female faces, each correctly labeled, and successively modifying the weights to push it towards making better predictions. Neural nets are a simple design, inspired by a cartoon version of actual cortical circuits, that goes back to a classic 1943 article by McCulloch and Pitts. More importantly, in 1974, Paul Werbos wrote a PhD thesis introducing back propagation to optimize the huge set of weights, making them work better on a set of inputs, i.e. a dataset that has been previously labeled by a human. This was played with for 40 years and promoted especially by Yann LeCun, with some success. But statisticians were skeptical it could ever solve hard problems because of what they called the bias-variance trade-off. They said you must compare the size of the dataset on which the algorithm is trained to the number of weights that must be learned: without enough weights, you can never model a complex dataset accurately, but with enough weights to do so, you will also model peculiar idiosyncrasies of your dataset that aren’t going to be representative of new data. So what happened?
Computers got really fast, so neural nets with vast numbers of weights could be trained, and datasets got really large thanks to the internet. Mirabile dictu, in spite of the statisticians’ predictions, the algorithm worked really well and somehow, magically, avoided the bias-variance problem. I think it’s fair to say no one knows how or why neural nets avoid it. This is a challenge for theoretical statisticians. But neural nets are making all kinds of applications really work, e.g. in vision, speech, language, medical diagnosis, game playing and other areas previously thought to be very hard to model. To top it off PR-wise, training these neural nets has now been renamed deep learning. Who could doubt that the brave new world of AI has arrived?
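To make the training loop concrete, here is a minimal sketch of back propagation on a deliberately tiny network (two inputs, three hidden units, one output), assuming a hypothetical toy task in place of the face example: each input is two numbers and the human-supplied label is 1 when the first number exceeds the second. Every name below (`init_weights`, `forward`, `backprop`, `train_step`) is illustrative and not taken from any library. The forward pass turns inputs into a guess, `backprop` applies the chain rule to find how each weight affects the mean error, and `train_step` nudges every weight downhill, which is the "successively modifying the weights" described above.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(n_in, n_hidden, seed=0):
    """Random starting weights for a net with one hidden layer and one output."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    return w1, b1, w2, 0.0


def forward(params, x):
    """Input numbers -> hidden activations h and a guess y between 0 and 1."""
    w1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xj for w, xj in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, y


def loss(params, data):
    """Mean cross-entropy between the guesses and the human-supplied labels."""
    total = 0.0
    for x, t in data:
        _, y = forward(params, x)
        y = min(max(y, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total -= t * math.log(y) + (1 - t) * math.log(1.0 - y)
    return total / len(data)


def backprop(params, data):
    """Gradient of the mean loss with respect to every weight, via the chain rule."""
    w1, b1, w2, b2 = params
    n = len(data)
    gw1 = [[0.0] * len(row) for row in w1]
    gb1 = [0.0] * len(b1)
    gw2 = [0.0] * len(w2)
    gb2 = 0.0
    for x, t in data:
        h, y = forward(params, x)
        d_out = (y - t) / n  # d loss / d (output pre-activation)
        gb2 += d_out
        for i, hi in enumerate(h):
            gw2[i] += d_out * hi
            d_hid = d_out * w2[i] * hi * (1.0 - hi)  # propagate back through hidden unit i
            gb1[i] += d_hid
            for j, xj in enumerate(x):
                gw1[i][j] += d_hid * xj
    return gw1, gb1, gw2, gb2


def train_step(params, data, lr):
    """One step of gradient descent: move each weight against its gradient."""
    (w1, b1, w2, b2), (gw1, gb1, gw2, gb2) = params, backprop(params, data)
    return (
        [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w1, gw1)],
        [b - lr * g for b, g in zip(b1, gb1)],
        [w - lr * g for w, g in zip(w2, gw2)],
        b2 - lr * gb2,
    )


# Hypothetical toy "labeled dataset": label 1 when the first number is larger.
rng = random.Random(1)
data = []
for _ in range(50):
    x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    data.append((x, 1 if x[0] > x[1] else 0))

params = init_weights(n_in=2, n_hidden=3)
before = loss(params, data)
for _ in range(500):
    params = train_step(params, data, lr=1.0)
after = loss(params, data)
print(f"mean loss before training {before:.3f}, after {after:.3f}")
```

Real systems do the same thing with millions or billions of weights and automatic differentiation instead of hand-written derivatives; the sketch only shows the mechanics, and says nothing about why such huge nets escape the bias-variance trade-off.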

BUT there is another hill to climb, arising from the belief that thinking of all kinds requires grammar. What this means is that your mind discovers patterns in the world that repeat, though not necessarily exactly. These patterns can be visual arrangements in the appearance of objects, like points in a line or the position of eyes in a face, or they can be the words in speech, simple actions like pressing the accelerator when driving, or even abstract ideas like loyalty. No matter what type of observation or thought carries the pattern, you expect it to keep recurring, so it can be used to understand new situations. As adults, everything in our thoughts is built from a hierarchy of the reusable patterns we have learned, and a full scene, event, plan or thought can be represented by a “parse tree” made up of these patterns. But here’s the rub: in its basic form, a neural net does not find new patterns. It works like a black box and doesn’t do anything except label its input, e.g. telling you “this image looks like it contains a face here”. In finding a face, it doesn’t say, “first I looked for eyes and then I knew where the rest of the face ought to be”. It just tells you its conclusion. We need algorithms that output: “I am finding a new pattern in most of my data, let’s give it a name”. Then they would be able to output not just a label but also a parse of the parts that make up their input data. Related to this desideratum, we are able to close our eyes and imagine what a car looks like, with its wheels, doors, hood etc.; that is, we can synthesize new data. This is like running the neural net backward, producing new inputs for each output label. Attempts to soup up neural nets to do this are ongoing but not yet ready for prime time. How hard it is to climb this hill is an open question, but I think we cannot get near human intelligence until this is solved.

If artificial intelligence is to demonstrate human intelligence, we had better define what human intelligence really consists of. Psychologists have, of course, worked hard to define it. For a long time, the idea that they could pin it down with a numerical measure, the IQ, held sway. Or does intelligence mean answering “Jeopardy” questions? Remembering more details about more events in your life? Composing or painting more skillful works? Yes, sure it might, but wait a minute: what humans are uniquely good at, and what a large proportion of our everyday thoughts concern, is guessing what particular fellow human beings are feeling, what their goals and emotions are, and even: what can I say to affect his/her feelings and goals so I can work with him/her and achieve my own goals? This is the skill that, more often than not, determines your success in life. Computer scientists have indeed looked at the need to model other agents’ knowledge and plans. A well-known example is given by imagining two generals, A and B, on opposite mountain tops who need to attack an enemy in the valley between them simultaneously but can only communicate by sending messengers who must sneak across enemy lines. A sends a message to B: “attack tomorrow?”, and B replies “yes”. But B doesn’t know whether his reply got through, so A must send another message confirming that he received it before B can be sure A will act. Then A does not know whether that confirmation arrived, and so on: more messages are needed. (In fact, there is no end to the messages they need to send to achieve full common understanding.) Computer scientists are well aware that they need to endow their AIs with the ability to maintain and grow models of what all the other agents in their world know and what their goals and plans are. This must include each AI knowing what it itself knows and what it doesn’t know. But arguably, this is all doable with contemporary code.
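The regress in the two-generals story can be checked mechanically for one simple family of protocols. In this minimal sketch (the protocol and the function `run_protocol` are hypothetical illustrations, not a standard algorithm), the generals agree in advance to exchange exactly N alternating messages, and each attacks only if every message addressed to it arrived. Whatever N is, losing just the last message leaves its sender attacking alone, because the sender's view is identical whether or not that message got through.

```python
def run_protocol(n_messages, lost):
    """Simulate a fixed exchange of n_messages alternating messages.

    Odd-numbered messages go A -> B ("attack tomorrow?", "got your reply", ...),
    even-numbered ones go B -> A. `lost` holds the 1-based numbers of messages
    the enemy intercepts; a lost message is never answered, so the exchange
    stops there. Each general attacks only if every message addressed to it
    under the protocol actually arrived. Returns {general: attacks?}.
    """
    expected = {"A": n_messages // 2, "B": (n_messages + 1) // 2}
    received = {"A": 0, "B": 0}
    for i in range(1, n_messages + 1):
        if i in lost:
            break  # the intended recipient never sees it
        recipient = "B" if i % 2 == 1 else "A"
        received[recipient] += 1
    return {g: received[g] == expected[g] for g in ("A", "B")}


for n in range(1, 6):
    everything_arrives = run_protocol(n, lost=set())
    last_one_lost = run_protocol(n, lost={n})
    print(f"N={n}: all delivered {everything_arrives}, last lost {last_one_lost}")
```

This only illustrates the regress for fixed-length exchanges; it is not the general impossibility argument, but it shows why adding one more acknowledgment never closes the gap.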

We need Emotions #$@*&!

However, in this game-theoretic world, an essential ingredient of human thought is missing: emotions. Without this, you’ll never really connect to humans. I find it strange that, to my knowledge, only one computer scientist has endeavored to model emotions, namely Rosalind Picard at the MIT Media Lab. Even the scientific study of the full range of human emotions seems stunted and largely neglected by many disciplines. For example, Frans de Waal, in his recent book Mama’s Last Hug about animal emotions, says, with regard to both human and animal emotions:

We name a couple of emotions, describe their expression and document the circumstances under which they arise but we lack a framework to define them and explore what good they do.

(Is this possibly the result of the fact that so many who go into science and math are on the autistic spectrum?) One psychologist clearly pinpointed the role emotions play in human intelligence. Howard Gardner’s classic book Frames of Mind: The Theory of Multiple Intelligences introduces, among a variety of skills, “interpersonal intelligence” (chiefly understanding others’ emotions) and “intrapersonal intelligence” (understanding your own). This is now called “emotional intelligence” (EI) by psychologists but, as de Waal said, its study has been marred by the lack of precise definitions. A recent “definition” in Wikipedia’s article on EI is:

Emotional intelligence can be defined as the ability to monitor one’s own and other people’s emotions, to discriminate between different emotions and label them appropriately, and to use emotional information … to enhance thought and understanding of interpersonal dynamics.

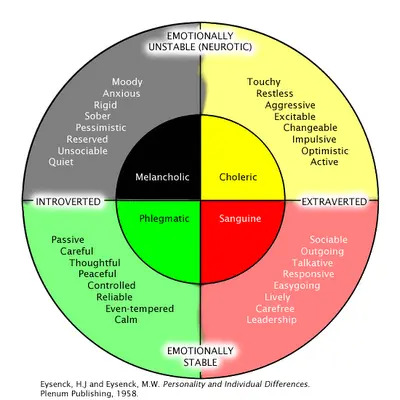

The oldest approach to classifying emotional states is due to Hippocrates: the four humors, bodily fluids thought to correspond to four distinct personality types and their characteristic emotions. These were: sanguine (active, social, easy-going), choleric (strong-willed, dominant, prone to anger), phlegmatic (passive, avoiding conflict, calm) and melancholic (brooding, thoughtful, can be anxious).

They are separated along two axes. The first axis is extravert vs. introvert, classically called warm vs. cold, with sanguine/choleric being extraverted and phlegmatic/melancholic being introverted. The second axis is relaxed vs. striving, classically called wet vs. dry, with sanguine/phlegmatic being relaxed and choleric/melancholic always seeking more. This classification was developed further in recent times by Hans Eysenck, whose colorful version is here:

The modern study of emotions goes back to Darwin’s book The Expression of the Emotions in Man and Animals, where he used the facial expressions that accompany emotions to make his classification. His theories were extended and made more precise by Paul Ekman and led to the theory that there are six primary emotions, each with its distinctive facial expression (Anger, Fear, Happiness, Sadness, Surprise and Disgust), and many secondary emotions that are combinations of primary ones with different degrees of strength. Robert Plutchik has extended the list to eight primary emotions, naming weaker and stronger variants and some combinations. There really is an open-ended list of secondary emotions, e.g. shame, guilt, gratitude, forgiveness, revenge, pride, envy, trust, hope, regret, loneliness, frustration, excitement, embarrassment, disappointment, etc., which don’t seem to be simple blends but rather grafts of emotions onto social situations with multiple agents and factors intertwined. Frans de Waal in his book (p. 85), referring to the above list, defines emotions as follows:

An emotion is a temporary state brought about by external stimuli relevant to the organism. It is marked by specific changes in body and mind: brain, hormones, muscles, viscera, heart, alertness etc. Which emotion is being triggered can be inferred by the situation in which the organism finds itself as well as from its behavioral changes and expressions.

A quite different approach has been developed by Jaak Panksepp, e.g. in his and Lucy Biven’s book The Archeology of Mind: Neuroevolutionary Origins of Human Emotions. Instead of starting from facial expressions, his approach is closer to the Greek humors. Panksepp long sought patterns of brain activity, especially subcortical activity and the different neurotransmitters sent to higher areas, that lead to distinct ongoing affective states and their corresponding activity patterns. Their list is quite different from Darwin’s, though partially overlapping. They identify 7 primary affective states:

- seeking/exploring

- angry

- fearful/anxious

- caring/loving

- sad/distressed

- playing/joyful

- lusting

An aside: it is not clear to me why he does not add an 8th affective state: pain. Although not usually termed an emotion, it is certainly an affective state of mind with subcortical roots, a uniquely nasty feeling, and something that triggers specific behaviors as well as causing specific facial expressions and bodily reactions.

No wonder de Waal said that as yet there is no definitive framework for emotional states. Perhaps what is needed to make a proper theory, usable in artificial intelligence code, is to start with massive data, the key that, with neural networks, now unlocks so much structure in speech and vision. The aim is to define three-way correlations of

(i) brain activity (especially the amygdala and other subcortical areas but also the insula and cingulate area of cortex),

(ii) a bodily response including hormones, heartbeat (emphasized by William James as the core signature of emotions) and facial expression and

(iii) social context including immediate past and future activity.

An emotional state should be defined by a cluster of such triples: a stereotyped neural and bodily response in a stereotypical social situation. To start, we might collect a massive dataset from volunteers hooked up to IVs and MRIs, listening to novels through headphones. I am reminded of a psychology colleague whose grad students had to spend countless hours in the tube in the wee hours of the night when MRI time was available. As with all clustering algorithms, this need not lead to one definitive set of distinct emotions but more likely to a flexible classification with many variants. We all seem to recognize nearly the same primary and secondary emotions when they occur in our friends, and artificial intelligence will need to be able to do this too. Without this analysis, computer scientists will flounder in programming their robots to mimic and respond to emotions in their interactions with humans, in other words to possess the crucially important skill that we should call artificial empathy. I would go further and submit that if we wish an AI to actually possess consciousness, it must, in some way, have emotions itself. A good way to probe the link between consciousness and emotions more deeply is to look at non-human animals and see what we know.
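The proposal to define emotional states as clusters of (brain, body, context) triples can be sketched with an off-the-shelf clustering method. Everything here is an assumption for illustration: the six features (two brain signals, two bodily signals, two context flags), the two synthetic prototype states and the noise level are invented, and k-means with farthest-first initialization is simply one common clustering algorithm swapped in, not something the text prescribes.

```python
import math
import random

# Hypothetical features of one observation, concatenating the three parts:
# brain (amygdala, insula), body (heart-rate change, hormone change),
# context (threat present, loss present). The values are invented.
PROTOTYPES = {
    "fear-like": (0.9, 0.6, 0.8, 0.7, 1.0, 0.0),
    "sadness-like": (0.3, 0.7, -0.3, 0.5, 0.0, 1.0),
}


def make_triples(n_per_state, noise=0.05, seed=0):
    """Generate noisy synthetic observations around each prototype state."""
    rng = random.Random(seed)
    points, truth = [], []
    for name, proto in PROTOTYPES.items():
        for _ in range(n_per_state):
            points.append(tuple(v + rng.gauss(0.0, noise) for v in proto))
            truth.append(name)
    return points, truth


def kmeans(points, k, iters=20):
    """Plain k-means; returns a cluster label per point and the cluster centers."""
    # Farthest-first initialization: start from the first point, then keep
    # adding the point farthest from every center chosen so far.
    centers = [list(points[0])]
    while len(centers) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(list(farthest))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each observation joins its nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


points, truth = make_triples(30)
labels, centers = kmeans(points, k=2)
for name in PROTOTYPES:
    found = {lab for lab, t in zip(labels, truth) if t == name}
    print(f"{name} observations fell into cluster(s) {sorted(found)}")
```

With real recordings the number of clusters would not be known in advance and the states would overlap far more than these two invented prototypes, which is why the outcome would more likely be a flexible classification than a definitive list.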

Consciousness in Animals

I want to submit that if we seek to guess whether AIs can acquire consciousness, we should first ask whether animals have consciousness. Let me start by saying to whoever may be reading this blog post: I believe that you, my friend, have consciousness. Except for screwy solipsists, we all accept that “inside” every fellow human’s head resides a consciousness not unlike one’s own. But in truth, we have no hard evidence for this besides our empathy. So should we use empathy and extend the belief in consciousness to animals? People with pets like dogs and cats will certainly insist that their pet has consciousness. Why? For one thing, they see behavior that they immediately understand as resulting from emotions similar to ones they themselves have. They find it ridiculous when ethologists would rather say an animal is displaying “predator avoidance” than say it “feels fear”. They don’t find it anthropomorphic to say their pet “feels fear”; they find it common sense and believe that their pet has not only feelings but also consciousness. Our language for talking about these issues is not very helpful. Consider the string of words: emotion, feeling, awareness, consciousness. Note the phrases: we “feel emotions”, we are “aware of our feelings,” we say we possess “conscious awareness,” phrases that link each consecutive pair of words in this string. In other words, standard English phrases link all these concepts and make sloppy thinking all too easy. One also needs to be cautious: in our digital age, many elderly people are being given quite primitive robots or screen avatars as companions, and they find it easy to mistakenly ascribe true feelings to these digital artifacts. So it’s tempting to say we simply don’t know whether non-human animals feel anything or whether they are conscious. Or we might hedge our bets and admit that they have feelings but draw the line at their having consciousness.
But either way, this is a stance that Jaak Panksepp derides as “terminal agnosticism”, closing off discussion on a question that ought to have an answer.

It is only recently that both emotions and consciousness have gained the status of being legitimate things for scientific study. In the last few decades animal emotions have been studied in amazing detail through endless hours of patient observation as well as testing. Both Frans de Waal’s book referred to above and Jaak Panksepp’s book (op. cit.) detail an incredible variety of emotional behavior, in species ranging from chimpanzees to rats and including not just primary emotions but some of the above secondary emotions (for instance, shame and pride in chimps and dogs). Given the extensive organ-by-organ homology of all mammalian brains, I see no reason to doubt that all mammals experience the same basic emotions that we do, although perhaps not so great a range of secondary emotions. And if we all share emotions, then there is just as much reason to ascribe consciousness to them as there is to ascribe consciousness to our fellow humans. This is a perfect instance of “Occam’s Razor”: it is by far the simplest way to explain the data.

The world becomes much more recognizable with the advent of predation, bigger animals eating smaller ones and all growing shells for protection, all this in the Cambrian period, 540-485 mya. Now we find the earliest vertebrates with a spinal cord. But we also find the first arthropods with external skeletons and the first cephalopods, predators in the phylum Mollusca that grew a ring of tentacles and that, at that time, had long conical shells (see below an image of a reconstruction of the cephalopod Orthoceras from the following Ordovician period). In all three groups, there are serious arguments for consciousness. One approach is to ask which animals feel pain, on the premise that feeling pain implies consciousness. There are experiments in which injured fish have been shown to be drawn to locations where there is a painkiller in the water, even if this location was previously avoided for other reasons. And one can test whether animals seek to protect or groom injured parts of their bodies: some crabs indeed do this whereas insects don’t (see Godfrey-Smith’s book, pp. 93-95, and the references in his notes). Unfortunately, this raises issues with boiling lobsters alive, an activity familiar to all New Englanders like myself. Damn. Another approach is the mirror test: does the animal touch its own body in a place where its mirror image shows something unusual? Amazingly, some ants have been reported to pass the mirror test, scratching themselves to remove a blue dot that they saw on their bodies in a mirror.

With octopuses, we find animals with brain sizes and behavior similar to those of dogs. Godfrey-Smith quotes the second-century Roman naturalist Claudius Aelianus as saying “Mischief and craft are plainly seen to be characteristic of [the octopus]”. Indeed, octopuses are highly intelligent and enjoy interacting and playing games with people and toys. They know and recognize individual humans by their actions, even in identical wetsuits. As well as Godfrey-Smith’s book, one should read Sy Montgomery’s bestseller The Soul of an Octopus: A Surprising Exploration into the Wonder of Consciousness. Their brains have roughly the same number of neurons as a dog’s, though, instead of a cerebellum to coordinate complex actions, they have large parts of their brains in each tentacle. This is not unlike how humans use their cerebral cortex in a supervisory role, letting the cerebellum and basal ganglia take over the detailed movements and simplest reactions. If you can read both these octopus-related books and not conclude that an octopus has just as much inner life, as much awareness and consciousness, as a dog, I’d be surprised. The most important point here is that there is nothing special about vertebrate anatomy: consciousness seems to arise in totally distinct phyla whose last common ancestor predates the Cambrian.

My personal view is that all the above also suggests that consciousness is not a simple binary affair where you either have it or you don’t. Rather, it is a matter of degree. This jibes with human experience of levels of sleep and of the effects of many drugs on our subjective state. For example, Versed is an anesthetic that creates a half-conscious/half-unconscious state. As our brains get bigger, we certainly acquire more capacity for memories, but some degree of memory has been found even in fruit flies, for example. When the frontal lobe expands, we begin making more and more plans, anticipating and trying to control the future. But even an earthworm anticipates the future a tiny bit: it “knows” that when it pushes ahead, it will feel the pressure of the earth on its head more strongly and that this is not because the earth is pushing it backwards. My personal belief, again, is that some degree of consciousness is present in all animals with a nervous system. On the other hand, Tolkien and his Ents notwithstanding, I find it hard to imagine consciousness in a tree. I have read that tree roots grow close enough to recognize the biochemical state of their neighbors (e.g. whether a neighboring tree is being attacked by some disease), but it feels overly romantic to call this a conversation between conscious trees.

The Experience of Time and Consciousness

I want to go back to the initial question of whether AIs can have consciousness. Much of this last section will annoy many people who read it: I need to cross a further bridge and talk about things that are usually classed not merely as philosophical but as religious or spiritual. I do not want to be “terminally agnostic”. Religion has been characteristic of human society since its earliest beginnings and, except for the occasional atheist, was a central part of everyone’s life until some point in the 20th century. With the rise of modern medicine, doctors have replaced ministers as the principal go-to persons when illness strikes and, as I said above, today’s intelligentsia pays little attention to religion. But I cannot respect rabid atheists like Richard Dawkins, who disrespect this entire history and the people who lived it.

Although sentience, that is, sensing the world and acting in response to these sensations, together with the corresponding brain activity, is often considered an essential feature of consciousness, I don’t believe it is. I believe that an experienced Buddhist meditator can reach a state in which they wipe their mind clean of thoughts and then experience pure consciousness all by itself, free of the chatter and clutter that fills our minds at all other waking times. If we accept this, consciousness must be something subtler than the set of particular thoughts that we can verbalize, the bread and butter of lab experiments on consciousness (e.g. Dehaene’s work). I can’t say I have experienced this, though I tried a bit. But it makes sense to me because of some times when I started on this path and found some measure of mental peace and quiet. I propose instead that the experience of the flow of time is the true core of consciousness, somewhat in the vein of Eckhart Tolle’s “The Power of Now”. This rests on the idea that the continual, ever-changing, fleeting present is something we experience but that no physics or biology explains. It is an experience that is fundamentally different from and more basic than sentience, and it is what makes us conscious beings.

I want to quote from the two most famous physicists in order to amplify this idea. Firstly, Newton, in his Principia, states:

Absolute, true, and mathematical time, of itself, and from its own nature flows equably without regard to anything external.

OK, this is indeed a good description of what time with its present moment feels like to us mortals. We are floating down a river — with no oars — and the water bears us along in a way that cannot be changed or modified. But now Einstein totally changed this world view by introducing a unified space-time whose points are events with a specific location and specific time. He asserted that there is no physically natural way of separating the two, no way to say two events are simultaneous when they occur in different places or that two events took place in the same location but at different times. Therefore, there is nothing in physics that corresponds to Newton’s time. He was, however, fully aware that people experience what Newton was describing and wondered if this, with its notion of the present, could have a place in physics.

Einstein said that the problem of the Now worried him seriously. He explained that the experience of the Now means something special for man, something essentially different from the past and the future, but that this important difference does not and cannot occur within physics. That this experience cannot be grasped by science seemed to him a matter of painful but inevitable resignation. He suspected that there is something essential about the Now which is just outside of the realm of science.

Yes, yes, yes, that’s what I’m talking about! How wonderful to hear it from Einstein.

Where does this leave our discussion in the last section? I don’t want to suggest that sentience has nothing to do with consciousness. I think the two are highly correlated and that Buddhist monks perform a sort of mental gymnastics. I want to list the properties that I have argued for, properties that circumscribe to some extent what consciousness is:

1. Consciousness is a reality that comes to many living creatures sometime around birth and leaves them when they die, creating a feeling of “moving” from past to future along a path in space-time as well as feeling sensations, emotions and their body movements.

2. Consciousness has degrees, varying from utterly vivid (e.g. positive feelings like love and negative feelings like pain) to marginal awareness. The brain has, moreover, an unconscious as well as a conscious part: activities, even thoughts, that do not reach consciousness.

3. Consciousness occurs in many creatures including, for instance, octopuses as well as mankind.

4. Consciousness gives us the belief that we have free will, that we can make choices that change the world. This has some relationship to quantum mechanics.

5. Consciousness is not describable by science; it is a reality on a different plane.

Points 1 and 5 follow Einstein’s comments quoted above; points 2 and 3 are the ideas from the previous section (and from Dehaene’s book Consciousness and the Brain). Point 4 was one of the main issues discussed in an earlier blog post of mine, and one on which I hope to write more later (see e.g. Stapp’s book Mindful Universe). I think it fair to say that religions are unanimous in embracing points 1 and 5 and in saying that consciousness, at least in humans, results from some sort of spirit enlivening, quickening our body, as Michelangelo depicts it.

My own version of this is to say: consciousness results from “spirit falling in love with matter”. Why love? This is a metaphorical way of expressing the intensity of consciousness and the associated will to live that seems universal among animals. “Love” is just the human way of saying that the spirit forges an awfully tight bond with matter, so tight that dying is painful. The main point is that if you accept that experiencing time cannot be explained by science, but occurs in a definite and not random fashion, then it seems that this experience has to come from somewhere. So again, Occam’s Razor suggests the simplest path is to use a word proposed by all religions and call it spirit. This is really a minimalist approach, not based on any personal revelation.

So the question posed in this post’s title then becomes: what would make a robot seem like a likely place for the spirit to want to quicken? Except possibly for pantheists, no one believes that rocks are aware. Everything I’ve written in this post suggests that the robot had better somehow have authentic emotions, one way or another, for this to happen. Quite a challenge.
